Eight months in, 2021 has already become a record year for brain-computer interface (BCI) funding, with investment already triple the $97 million raised in 2019. BCIs translate human brainwaves into machine-understandable commands, allowing people to, for example, operate a computer with their mind. In just the last couple of weeks, Elon Musk’s BCI company Neuralink announced $205 million in Series C funding, and Paradromics, another BCI firm, announced a $20 million seed round a few days earlier.

Almost at the same time, Neuralink competitor Synchron announced that it had received groundbreaking approval from the FDA to run clinical trials of its flagship product, the Stentrode, in human patients. Even before this approval, the Stentrode was already in clinical trials in Australia, where four patients had received the implant.

(Above: Synchron’s Stentrode at work.)

(Above: Neuralink demo, April 2021.)

Yet many are skeptical of Neuralink’s progress and of the claim that BCI is just around the corner. And while the definition of BCI and its applications can be ambiguous, I’d suggest a different perspective: breakthroughs in another field, artificial intelligence, are making the promise of BCI far more tangible than before.

At its core, BCI is about extending human capabilities or compensating for lost ones, as in the case of paralyzed individuals.

Companies in this space pursue that goal with two forms of BCI: invasive and non-invasive. In both cases, brain activity is recorded and the neural signals are translated into commands such as moving items with a robotic arm, mind-typing, or speaking through thought. The engine behind these translations is machine learning, which recognizes patterns in brain data and can generalize those patterns across many human brains.
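To make “recognizing patterns” concrete, here is a minimal Python sketch of one of the simplest possible decoders, a nearest-centroid classifier. It assumes each window of brain activity has already been reduced to a small feature vector; the feature values, the command names, and the functions `train_decoder` and `decode` are all hypothetical, and production BCIs rely on far more sophisticated models.

```python
import math

# Hypothetical setup: each example is a short feature vector summarizing one
# window of recorded brain activity (e.g. signal power per electrode). The
# numbers below are made up for illustration, not real recordings.
Features = list[float]


def centroid(vectors: list[Features]) -> Features:
    """Average a list of equal-length feature vectors, column by column."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def train_decoder(examples: dict[str, list[Features]]) -> dict[str, Features]:
    """Learn one prototype (the mean feature vector) per command."""
    return {command: centroid(vecs) for command, vecs in examples.items()}


def decode(prototypes: dict[str, Features], x: Features) -> str:
    """Translate a new feature vector into the command with the nearest prototype."""
    return min(prototypes, key=lambda command: math.dist(prototypes[command], x))


training = {
    "move_left": [[0.9, 0.2], [1.1, 0.1], [1.0, 0.3]],
    "move_right": [[0.2, 1.0], [0.1, 0.9], [0.3, 1.1]],
}
decoder = train_decoder(training)
print(decode(decoder, [0.95, 0.25]))  # move_left
```

Generalizing “across many human brains” amounts to hoping that prototypes learned from some people still sit close to the feature vectors produced by others.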

Pattern recognition and transfer learning

The ability to translate brain activity into actions was achieved decades ago. The main challenge for private companies today is building mass-market products that can find common signals across different brains that correspond to the same action, such as a brainwave pattern that means “move my right arm.”

This doesn’t mean the engine must do so without any fine-tuning. In Neuralink’s MindPong demo above, the rhesus monkey went through a few minutes of calibration so that the model could be fine-tuned to his brain’s neural activity patterns. We can expect this routine for other tasks as well, though at some point the engine might be powerful enough to predict the right command without any fine-tuning, an ability known as zero-shot learning.
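Calibration can be pictured as adapting a general-purpose decoder to one individual. The Python sketch below uses a deliberately simple stand-in for fine-tuning: it nudges per-command prototypes learned from other users toward the average of a new user’s calibration trials. This is not how Neuralink’s model was tuned; the prototype approach, the `weight` parameter, and all numbers are hypothetical. Setting `weight=0` corresponds to the zero-shot case, where the shared model is used as-is.

```python
import math

# Hypothetical prototypes learned from many previous users (a "population"
# model). The values are invented for illustration.
population = {"left": [1.0, 0.0], "right": [0.0, 1.0]}


def mean(vectors):
    """Column-wise mean of a list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def calibrate(prototypes, calibration_trials, weight=0.8):
    """Pull each command's prototype toward this user's calibration trials.

    weight=0.0 leaves the population prototypes untouched (zero-shot use);
    weight=1.0 relies only on the new user's calibration data.
    """
    adapted = dict(prototypes)
    for command, trials in calibration_trials.items():
        user = mean(trials)
        adapted[command] = [
            (1 - weight) * base + weight * own
            for base, own in zip(prototypes[command], user)
        ]
    return adapted


def decode(prototypes, x):
    """Return the command whose prototype is nearest to feature vector x."""
    return min(prototypes, key=lambda command: math.dist(prototypes[command], x))


# A few calibration trials from a new user whose signals differ from the
# population average (again, made-up numbers).
calibration = {
    "left": [[0.4, 0.9], [0.6, 1.1]],
    "right": [[-0.5, 1.9], [-0.5, 2.1]],
}
sample = [0.5, 1.0]  # the new user trying to move left

print(decode(population, sample))                          # right (zero-shot misses)
print(decode(calibrate(population, calibration), sample))  # left
```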

Fortunately, AI research in pattern recognition has made huge strides, particularly in vision, audio, and text, producing more robust techniques and architectures that help AI applications generalize.

The groundbreaking paper “Attention Is All You Need” introduced the ‘Transformer’ architecture and inspired many other exciting papers. Since its release in late 2017, the Transformer has led to multiple breakthroughs across domains and modalities, such as Google’s ViT, DeepMind’s multimodal Perceiver, and Facebook’s wav2vec 2.0. Each achieved state-of-the-art results on its respective benchmarks, beating previous techniques for the task at hand.

One key trait of large pretrained Transformer models is their zero- and few-shot learning capability, which lets them generalize to new tasks with few or no task-specific examples.
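For readers curious about what a Transformer actually computes, its core operation as defined in “Attention Is All You Need” is scaled dot-product attention, softmax(QKᵀ/√d_k)V. Below is a minimal, dependency-free Python sketch of that formula for a single attention head, without the learned projection matrices, multiple heads, or other layers of a full Transformer. Treating the input rows as embeddings of successive brain-signal windows is an illustrative assumption, not something the models named above do.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V.

    Q, K and V are lists of row vectors; K and V need the same number of rows.
    Each output row is a weighted average of the rows of V, where the weights
    reflect how well the query matches each key.
    """
    d_k = len(K[0])
    outputs = []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        )
    return outputs


# Self-attention over three hypothetical embeddings (e.g. of successive signal
# windows): queries, keys and values are all the same sequence here.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(X, X, X):
    print([round(value, 3) for value in row])
```

Because every position can weigh every other position, the same mechanism applies whether the rows represent words, image patches, audio frames, or, hypothetically, EEG windows.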

Abundance of data

State-of-the-art deep learning models, such as the ones highlighted above from Google, DeepMind, and Facebook, require massive amounts of data. For reference, OpenAI’s well-known GPT-3 model, a Transformer able to generate human-like language, was trained on text that included a Common Crawl dataset filtered down from 45TB of compressed plaintext to about 570GB, along with the WebText2 and Wikipedia datasets.

Online data is one of the major catalysts fueling the recent explosion in computer-generated natural-language applications. Of course, EEG (electroencephalography) data is not as readily available as Wikipedia pages, but this is starting to change.

Research institutions worldwide are publishing more and more BCI-related datasets, allowing researchers to build on one another’s work. For example, researchers from the University of Toronto used the Temple University Hospital EEG Corpus (TUEG), which consists of clinical recordings from over 10,000 people. They used a training approach inspired by Google’s BERT natural-language Transformer to develop a pretrained model of raw EEG sequences that works across different recording hardware, subjects, and downstream tasks. They then showed that this approach can learn representations from massive amounts of unlabeled EEG data that are suited to downstream BCI applications.
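The core idea behind BERT-style pretraining is self-supervision: hide part of the input and train a model to recover it from context, so unlabeled recordings become usable training data. The Python sketch below shows only that data-preparation step for an EEG-like signal, under simplifying assumptions: a single channel, fixed non-overlapping windows, and a `None` placeholder for masked positions. The function names and parameters are hypothetical, and this is not the exact objective the University of Toronto researchers used.

```python
import random

MASK = None  # hypothetical stand-in; real models typically use a learned mask vector


def to_windows(samples, size):
    """Split a 1-D signal into consecutive, non-overlapping windows of `size` samples."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]


def mask_sequence(sequence, mask_prob=0.15, rng=None):
    """BERT-style masking: hide a random subset of positions.

    Returns the masked sequence and the positions a model would have to
    recover from the surrounding, unmasked context. No labels are needed,
    which is what lets this kind of pretraining use unlabeled recordings.
    """
    if rng is None:
        rng = random.Random()
    masked, targets = [], []
    for i, item in enumerate(sequence):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets.append(i)
        else:
            masked.append(item)
    return masked, targets


# Made-up single-channel "recording" of 20 samples, cut into 5 windows of 4.
signal = [float(i) for i in range(20)]
windows = to_windows(signal, 4)
masked, targets = mask_sequence(windows, mask_prob=0.3, rng=random.Random(0))
print(len(windows), targets)
```

During pretraining, a loss would compare the model’s predictions at `targets` with the original windows; only after that would the model be fine-tuned on a smaller labeled BCI task.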

Data collected in research labs is a great start but may fall short for real-world applications. If BCI is to accelerate, we will need to see commercial products emerge that people can use in their daily lives. With projects such as OpenBCI making hardware affordable, and commercial companies now launching non-invasive products to the public, data may soon become more accessible. Two examples are NextMind, which launched a developer kit last year for developers who want to build on top of NextMind’s hardware and APIs, and Kernel, which plans to release its non-invasive brain-recording helmet, Flow, soon.

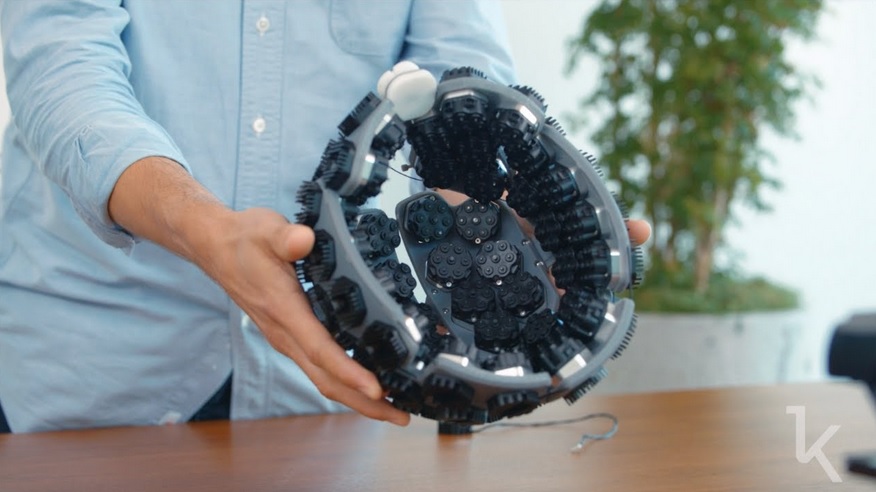

(Above: Kernel’s Flow device.)

Hardware and edge computing

BCI applications must operate in real time, as with typing or playing a game. A latency of more than one second from thought to action would make for an unacceptable user experience, since the interaction would feel laggy and inconsistent (think of playing a first-person shooter with a one-second delay).

Sending raw EEG data to a remote inference server, decoding it there into a concrete action, and returning the response to the BCI device would introduce exactly this kind of latency. Sending sensitive data such as your brain activity over the network also raises privacy concerns.
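A back-of-the-envelope latency budget makes the trade-off explicit: a network round trip adds to every single decision, however fast the server is. The Python sketch below treats end-to-end latency as a simple sum of stages; that additive model, the stage names, and every number in it are hypothetical placeholders chosen for illustration, not measurements of any real device or network.

```python
from dataclasses import dataclass

LATENCY_BUDGET_S = 1.0  # the one-second thought-to-action threshold from the text


@dataclass
class Pipeline:
    """Simplified, hypothetical model of where decoding latency comes from."""
    window_s: float             # signal collected before a decode can start
    inference_s: float          # time to run the decoder
    network_rtt_s: float = 0.0  # upload + response round trip; 0 when decoding on-device

    def latency_s(self) -> float:
        return self.window_s + self.inference_s + self.network_rtt_s

    def within_budget(self, budget_s: float = LATENCY_BUDGET_S) -> bool:
        return self.latency_s() <= budget_s


# Illustrative numbers only, not measurements.
on_device = Pipeline(window_s=0.5, inference_s=0.1)
remote = Pipeline(window_s=0.5, inference_s=0.05, network_rtt_s=0.6)
for name, p in [("on-device", on_device), ("remote", remote)]:
    print(f"{name}: {p.latency_s():.2f} s, within budget: {p.within_budget()}")
```

With these placeholder values, the remote path exceeds the one-second budget even though its server-side inference is faster, while the on-device path stays within it.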

Recent progress in AI chip development can help solve these problems. Giants such as Nvidia and Google are betting big on smaller, more powerful chips optimized for inference at the edge. Such chips could let BCI devices decode signals offline, with no need to send data elsewhere, eliminating the latency and privacy issues that sending it would create.
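Running inference on the device itself typically means processing the signal as a stream. The Python sketch below keeps a fixed-size buffer of the latest samples and runs a decoder every `hop` samples, so no raw data needs to leave the device. The `StreamingDecoder` class, its parameters, and the toy decoder are hypothetical; on real hardware, `decode_fn` would be a model compiled for the edge chip.

```python
from collections import deque


class StreamingDecoder:
    """Hypothetical on-device loop: decode a live signal with a sliding window.

    window: how many of the most recent samples each decode looks at.
    hop: how many new samples must arrive between successive decodes.
    decode_fn: any function mapping a window of samples to a command
               (e.g. a small model running on an edge AI chip).
    """

    def __init__(self, window, hop, decode_fn):
        self.buffer = deque(maxlen=window)  # oldest samples fall off automatically
        self.hop = hop
        self.decode_fn = decode_fn
        self._new_samples = 0

    def push(self, sample):
        """Add one sample; return a command when a decode is due, else None."""
        self.buffer.append(sample)
        self._new_samples += 1
        if len(self.buffer) == self.buffer.maxlen and self._new_samples >= self.hop:
            self._new_samples = 0
            return self.decode_fn(list(self.buffer))
        return None


def toy_decode(window):
    """Stand-in decoder: a positive average means "up", otherwise "down"."""
    return "up" if sum(window) / len(window) > 0 else "down"


decoder = StreamingDecoder(window=4, hop=2, decode_fn=toy_decode)
stream = [1, 1, 1, 1, -5, -5, -5, -5]
commands = [c for c in (decoder.push(s) for s in stream) if c is not None]
print(commands)  # ['up', 'down', 'down']
```

The `hop` parameter sets the responsiveness trade-off: at a sampling rate of r samples per second, a new command can be issued every hop / r seconds, and a smaller hop means snappier control at the cost of more inference runs on the device.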

Final thoughts

The human brain hasn’t evolved much in thousands of years, while the world around us has changed massively in just the last decade. Humanity has reached an inflection point where it must enhance its brain capabilities to keep up with the technological innovation surrounding it.

It’s possible that the current approach of reducing brain activity to electrical signals is the wrong one and that we might experience a BCI winter if the likes of Kernel and NextMind don’t produce promising commercial applications. But the potential upside is too consequential to ignore — from helping paralyzed people who have already given up on the idea of living a normal life, to enhancing our everyday experiences.

BCI is still in its early days, with many challenges to be solved and hurdles to overcome. Yet for some, that should already be exciting enough to drop everything and start building.

Sahar Mor has 13 years of engineering and product management experience focused on AI products. He is the founder of AirPaper, a document intelligence API powered by GPT-3. Previously, he was founding Product Manager at Zeitgold, a B2B AI accounting software company, and Levity.ai, a no-code AutoML platform. He also worked as an engineering manager in early-stage startups and at the elite Israeli intelligence unit, 8200.

VentureBeat